Visual Search vs Text: What Converts in Fashion?

A decision-maker guide to when visual search beats text in fashion e‑commerce—and how to measure it.

When visual beats text in fashion discovery, and when it doesn’t

Shoppers rarely arrive knowing the exact fashion term for what they want. They come with a mental picture: an influencer’s look, a runway detail, or a color-and-silhouette vibe. That’s why visual search can feel like magic: snap or upload an image, and the catalog reshapes around the look. But does it actually convert better than a well-tuned text search? The answer depends on intent, data quality, and UX.

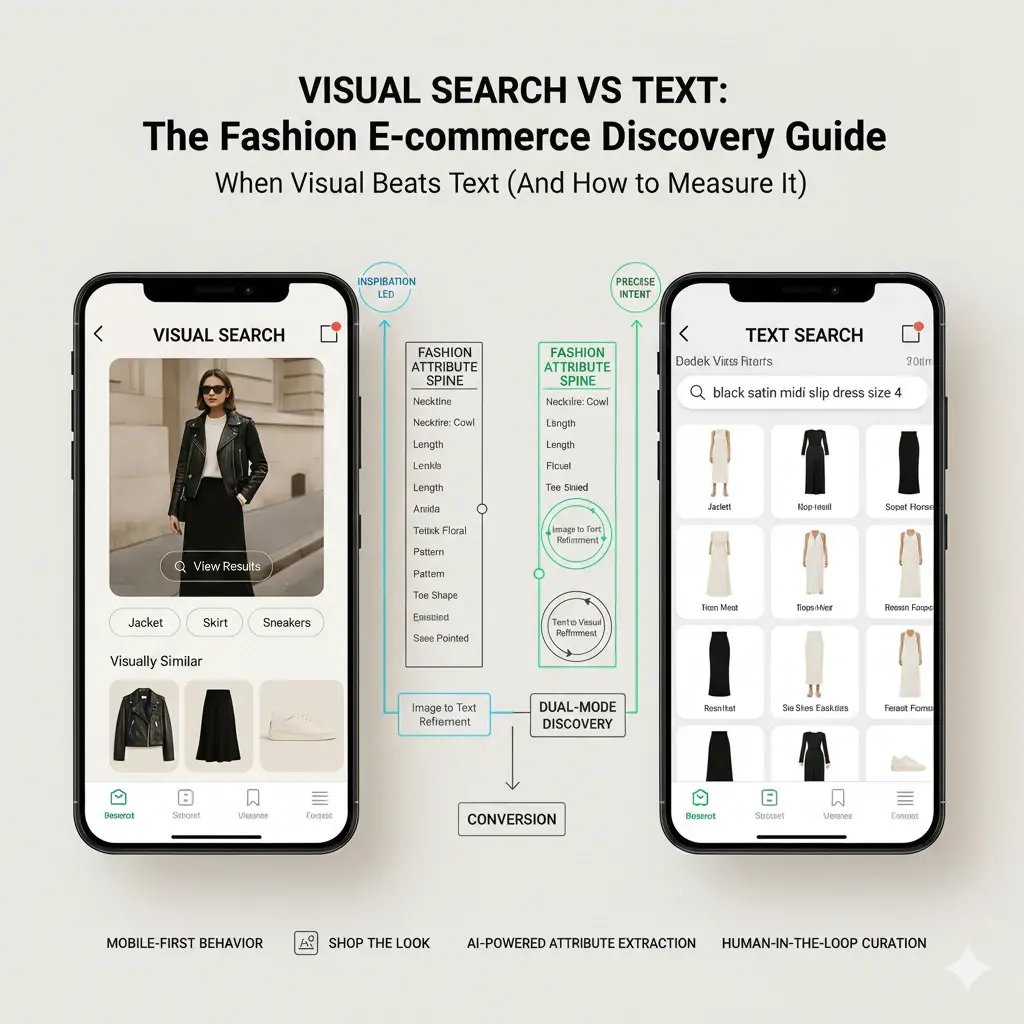

Visual search excels at inspiration-led journeys (social to shop) and ambiguous aesthetics (“that satin slip Kate wore”), while text search still wins for precise intents (“black satin midi slip dress size 4”).

The opportunity isn’t to crown a winner; it’s to help shoppers fluidly move between seeing and saying. In fashion, discovery fails when catalogs aren’t described the way people think.

Visual search without fashion attributes becomes a black box; text search without style vocabulary returns generic grids. Brands that win unify two layers: a fashion-grade attribute spine and a dual-mode discovery experience.

Computer vision should extract interpretable style features (neckline, strap width, length, toe shape, pattern family) that map to the same taxonomy merchandisers use. Text search should boost by those attributes and by trend context.

When both modes speak the same attribute language, shoppers can pivot: start from an image, then narrow with “cowl neckline” and “ankle length”; or start with text and refine visually.

Visual-first behavior appears to be growing on mobile, particularly among Gen Z. Social platforms popularized “shop the look,” and retailers that enable image-led discovery report stronger engagement on social traffic.

Pinterest’s Lens normalized camera-led search. For a general primer on visual discovery in commerce, see Shopify’s fashion e‑commerce overview. Meanwhile, trend-intelligence vendors show how image signals predict demand, underscoring the value of visual attributes in merchandising; for example, Heuritech explains how it tracks silhouettes and colors across social media to inform buys.

The punchline: don’t ask “visual or text?” Ask “which intent, which moment, which device?” Then build a discovery system that routes gracefully: camera icon for visual, suggestive search for text, visible style facets, and outfit completion on PDP—all sharing the same attribute spine. That’s how inspiration becomes conversion.

Designing the fashion search stack: attributes, vision, and UX

Visual search only pays when it’s wired into a fashion-specific search stack and experience. Start by treating your product data model like a runway spec sheet, not a generic catalog.

Fashion discovery depends on attributes beyond category and color—silhouette, rise, sleeve length, neckline, wash, fabric composition and stretch %, heel/last shape for footwear, and occasion.
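As a minimal sketch of what such an attribute record might look like, the snippet below models a handful of the fields listed above and flags the ones a product is still missing. The class name, field names, and sample values are illustrative assumptions, not a prescribed schema.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class StyleAttributes:
    """Hypothetical attribute record; field names are illustrative only."""
    category: str
    color_family: str
    silhouette: Optional[str] = None
    neckline: Optional[str] = None
    sleeve_length: Optional[str] = None
    length: Optional[str] = None
    fabric: Optional[str] = None
    stretch_pct: Optional[float] = None
    occasion: Optional[str] = None


def missing_attributes(item: StyleAttributes) -> list[str]:
    """Return the names of attribute fields that are still empty."""
    return [f.name for f in fields(item) if getattr(item, f.name) is None]


# Sample product with assumed values
slip_dress = StyleAttributes(
    category="dress",
    color_family="black",
    silhouette="slip",
    neckline="cowl",
    length="midi",
    fabric="satin",
)
print(missing_attributes(slip_dress))
```

Running a check like this across the catalog gives a quick view of which categories have attribute gaps before either search mode is tuned.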

These attributes drive both text search (“black satin midi slip dress”) and how a vision model should cluster similarity. If the PDP doesn’t carry these fields consistently, both search modes underperform. Next, design a visual pipeline that mirrors how shoppers browse.

A shopper uploads or snapshots an inspiration image; a computer vision model extracts attributes (color family, pattern, neckline, sleeve, length, silhouette) and local features (stitching style, hardware).

Retrieval should rank in two ways: attribute match (for explainability: “similar neckline and satin finish”) and embedding similarity (for aesthetics). Keep retrieval boundaries tight: fetch only the minimal context (attributes, availability, price band, images) and never move full profiles into the CV service. This reduces latency and risk.

Text search needs just as much love. In fashion, keyword queries are incomplete (“black dress”) and often ambiguous (“ballet flats” could include square or almond toes). Use fashion-aware synonym maps, attribute-aware indexing, and boosts by fit block or trend cohort.
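The two-signal ranking described for visual retrieval can be sketched roughly as follows. This is an illustration under assumptions: the catalog entries, the toy three-dimensional vectors, the `attr_weight` knob, and the linear blend are all hypothetical, and a production system would use a real embedding model and a vector index.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def attribute_overlap(query_attrs, item_attrs):
    """Return (share of query attributes matched, list of matched names)."""
    matched = [k for k, v in query_attrs.items() if item_attrs.get(k) == v]
    share = len(matched) / len(query_attrs) if query_attrs else 0.0
    return share, matched


def rank(query_attrs, query_vec, catalog, attr_weight=0.5):
    """Blend attribute match and embedding similarity.

    attr_weight is an assumed tuning knob, not a recommended value.
    """
    results = []
    for item in catalog:
        share, matched = attribute_overlap(query_attrs, item["attrs"])
        sim = cosine(query_vec, item["vec"])
        score = attr_weight * share + (1 - attr_weight) * sim
        # Matched attribute names double as reason codes for the UI.
        results.append({"sku": item["sku"], "score": score, "reasons": matched})
    return sorted(results, key=lambda r: r["score"], reverse=True)


# Toy catalog with made-up SKUs and vectors
catalog = [
    {"sku": "A1", "attrs": {"neckline": "cowl", "fabric": "satin", "length": "midi"}, "vec": [0.9, 0.1, 0.2]},
    {"sku": "B2", "attrs": {"neckline": "square", "fabric": "satin", "length": "mini"}, "vec": [0.8, 0.3, 0.1]},
    {"sku": "C3", "attrs": {"neckline": "crew", "fabric": "jersey", "length": "maxi"}, "vec": [0.1, 0.9, 0.4]},
]
query_attrs = {"neckline": "cowl", "fabric": "satin"}  # as extracted from the uploaded image
query_vec = [1.0, 0.0, 0.2]

ranked = rank(query_attrs, query_vec, catalog)
for r in ranked:
    print(r["sku"], round(r["score"], 3), r["reasons"])
```

Keeping the matched attribute names alongside the score is what makes the result explainable: the same list can feed a short reason string on the results page.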

Merchandisers should be able to tune ranking by campaign (e.g., elevate pleated skirts during a balletcore capsule) while guardrails keep results relevant. For more on fashion e‑commerce realities and why attribute depth matters, see Shopify’s fashion e‑commerce overview.

The UX must make trade-offs visible. Pair a camera icon (visual search) with a smart, suggestive search box. When a shopper uses visual search, show top matches with concise reason codes (“matching neckline, satin finish”) and offer fast pivots: “wider strap,” “longer hem,” “less shine.” On the text side, auto-suggest style terms, surface visual facets (chips for necklines, lengths, toe shapes), and prefetch PDP snippets so results feel instantaneous.

Mobile is the proving ground; ensure one-hand interactions, large tap targets, and persistent filters. Gen Z skews visual and mobile-first, and Business of Fashion and McKinsey continue to highlight social-led discovery shaping purchase journeys (see BoF x McKinsey). Finally, keep accessibility in view: alt text and sufficient color contrast make the experience inclusive, and alt text also helps SEO.

Measuring impact: KPIs, experiments, and ROI for discovery

Fashion teams don’t need another feature—they need proof it moves the P&L.

Start with a measurement plan that isolates discovery’s contribution to conversion and AOV.

Define a scoreboard before shipping: search-to-product-view rate (by mode), add-to-cart rate (by mode), first-result click share, dwell time, bounce rate, and filter engagement.
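To make the by-mode scoreboard concrete, here is a small sketch that computes two of these rates from raw events. The event shape (`mode`, `type`), the choice of searches as the denominator for both rates, and the synthetic counts are assumptions for illustration; real analytics schemas will differ.

```python
from collections import defaultdict

FUNNEL_STEPS = ("search", "product_view", "add_to_cart")


def scoreboard(events):
    """Compute per-mode funnel rates from a list of event dicts."""
    counts = defaultdict(lambda: dict.fromkeys(FUNNEL_STEPS, 0))
    for event in events:
        if event["type"] in FUNNEL_STEPS:
            counts[event["mode"]][event["type"]] += 1

    board = {}
    for mode, c in counts.items():
        searches = c["search"]
        board[mode] = {
            # Both rates use searches as the denominator (an assumed definition).
            "search_to_product_view": c["product_view"] / searches if searches else 0.0,
            "add_to_cart_rate": c["add_to_cart"] / searches if searches else 0.0,
        }
    return board


def make_events(mode, searches, views, carts):
    """Build synthetic events for illustration only."""
    return (
        [{"mode": mode, "type": "search"}] * searches
        + [{"mode": mode, "type": "product_view"}] * views
        + [{"mode": mode, "type": "add_to_cart"}] * carts
    )


events = make_events("visual", 4, 3, 1) + make_events("text", 5, 2, 1)
board = scoreboard(events)
for mode, rates in board.items():
    print(mode, rates)
```

Agreeing on the denominators before launch matters more than the code itself: a rate per search and a rate per session can tell different stories about the same feature.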

Segment by category (dresses, denim, sneakers) where style signals matter and where returns are costly.

Run disciplined experiments. Roll out visual search behind feature flags to a canary cohort. Use randomized control at the session or user level if possible; otherwise use matched cohorts with pre-registered stop-loss thresholds.
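One common way to get stable user-level assignment behind a feature flag is deterministic hash bucketing, sketched below. This is a stand-in for true randomization, not a specific vendor's flagging API; the function name, experiment key, and 10% canary share are assumptions.

```python
import hashlib


def assign_arm(user_id: str, experiment: str, treatment_share: float = 0.1) -> str:
    """Deterministically assign a user to 'visual_search' or 'control'.

    Hashing the experiment name together with the user ID keeps the
    assignment stable across sessions and independent across experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "visual_search" if bucket < treatment_share else "control"


# Hypothetical experiment key and user IDs
users = [f"user-{i}" for i in range(10_000)]
arms = [assign_arm(u, "visual-search-canary") for u in users]
share = arms.count("visual_search") / len(arms)
print(f"treatment share: {share:.3f}")
```

Because the same user always lands in the same arm, funnel events can be joined to the assignment after the fact without storing a separate lookup table.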

Expect the largest lift on inspiration-led traffic (social referrals) and on mobile. Attribute lift at the journey nodes (“visual search result → PDP → add to cart”), not at the channel level.

Pair outcome KPIs with technical SLOs: P95 results latency under 300 ms, low error rates, and sufficient image-processing throughput.
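For the latency SLO, a quick check against the 300 ms P95 target might look like the sketch below. It uses the nearest-rank percentile definition (other definitions interpolate and can give slightly different values), and the latency samples are made up for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile of empty sample")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Made-up result latencies in milliseconds
latencies_ms = [
    120, 140, 135, 180, 210, 160, 150, 290, 170, 145,
    155, 165, 175, 185, 195, 205, 130, 125, 310, 200,
]

SLO_P95_MS = 300  # target taken from the text above
p95 = percentile(latencies_ms, 95)
meets_slo = p95 < SLO_P95_MS
print(f"P95 = {p95} ms, meets SLO: {meets_slo}")
```

Note that one slow request (310 ms here) does not break a P95 target on its own; the SLO fails only when more than 5% of requests exceed the threshold.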

If you can’t see it, you can’t scale it: trace from event to action with observability. Splunk offers leader-friendly primers on why this pays off.

Connect discovery to size/fit to reduce returns. When a shopper lands on a PDP via visual search, surface a size recommendation badge and an outfit completion strip tuned to their style profile. Returns in fashion often hinge on both style and fit; addressing both mitigates “bracketing” behavior (ordering several sizes or styles and returning most of them).

For industry context and macro trends shaping digital fashion, see the McKinsey State of Fashion.

Finally, close the loop operationally. Give merchandisers a weekly report: which attributes most drive discovery, gaps where inspiration images map to out-of-stock variants, and trend signals (e.g., satin bias-cut slips rising). Feed this back into buy plans and content shoots.

Keep privacy a performance feature: evaluate consent and preferences at activation, minimize PII in payloads, and log decisions for audit. That way, when the next trend spikes from TikTok to checkout, your search stack can meet the moment, visually and verbally.